Hand Gesture Recognition

Overview

People with dexterity issues often struggle to use a computer mouse for extended periods of time, which can limit their ability to use technology and access information. Additionally, with the increasing popularity of wearables, home automation, and gaming, there is a growing need for hands-free interaction with devices. The hand gesture recognition project aims to provide an accessible and intuitive interface for users with dexterity issues, as well as a convenient and efficient interface for all potential users. By recognizing and interpreting hand gestures, the system will enable users to control devices and interact with software without the need for a physical mouse or other input device. This technology has the potential to significantly improve accessibility and usability for people with dexterity issues, as well as enhance the overall user experience for a wide range of users.

Click here to view the research paper.

Collaborators

This project was part of my Research Assistantship at the Indian Institute of Technology, Guwahati. Each collaborator's contributions are listed below:

Spandita Sarmah: Web Page Design, Gesture Algorithm Implementation

Dr. M. K. Bhuyan: Ideation of concept and guidance in study of Gesture Recognition

Rahulraj Singh: Study of relevant research work and guidance with System Design

Dr. M. Uma: Guidance with Research Documentation

User Persona

Name: David Nguyen

Age: 35

Occupation: Accountant

Location: Washington DC

Education: Bachelor's degree in Accounting

Technology: Comfortable with using computers and mobile devices, but experiences difficulty using a mouse due to dexterity issues

Goals: David wants to be able to use technology without experiencing physical discomfort or limitations. He values accessibility, ease of use, and efficiency.

Pain Points: David finds it difficult and uncomfortable to use a computer mouse for extended periods of time due to his dexterity issues. This makes it challenging to perform his job duties and complete daily tasks. He values his independence and feels frustrated when he has to rely on others for assistance.

User Scenario: David is working on a financial report on his computer. He begins to experience discomfort and pain in his hand after using the mouse for an hour. He remembers hearing about a new hand gesture recognition system that he could use instead of the mouse. He accesses the system and is able to navigate through the report using simple hand gestures. He feels relieved and grateful that he can complete his work without experiencing pain or discomfort. He decides to recommend the system to his colleagues who also experience dexterity issues.

Ideation

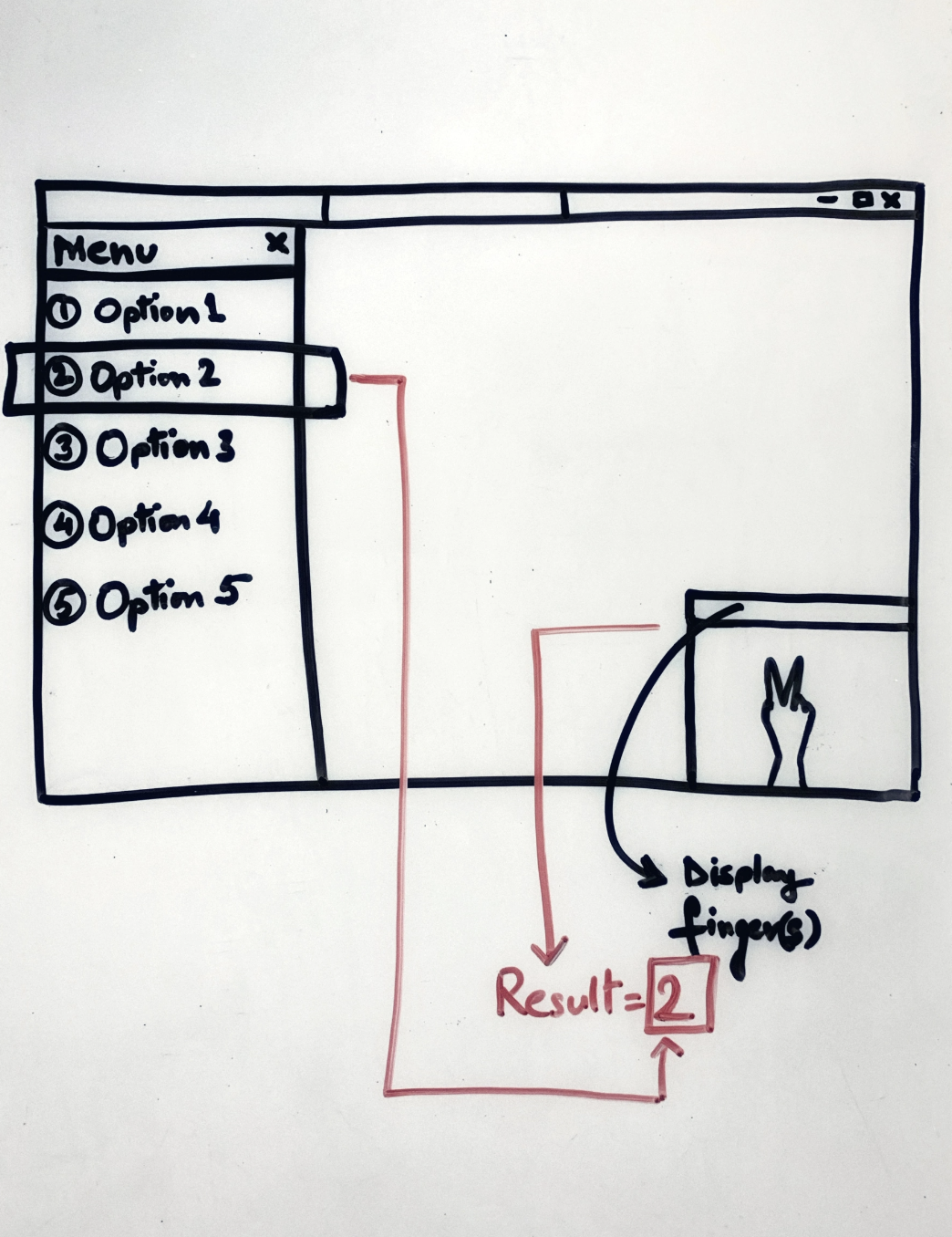

We brainstormed and sketched out scenarios and concepts to visualize the use of this algorithm.

Development

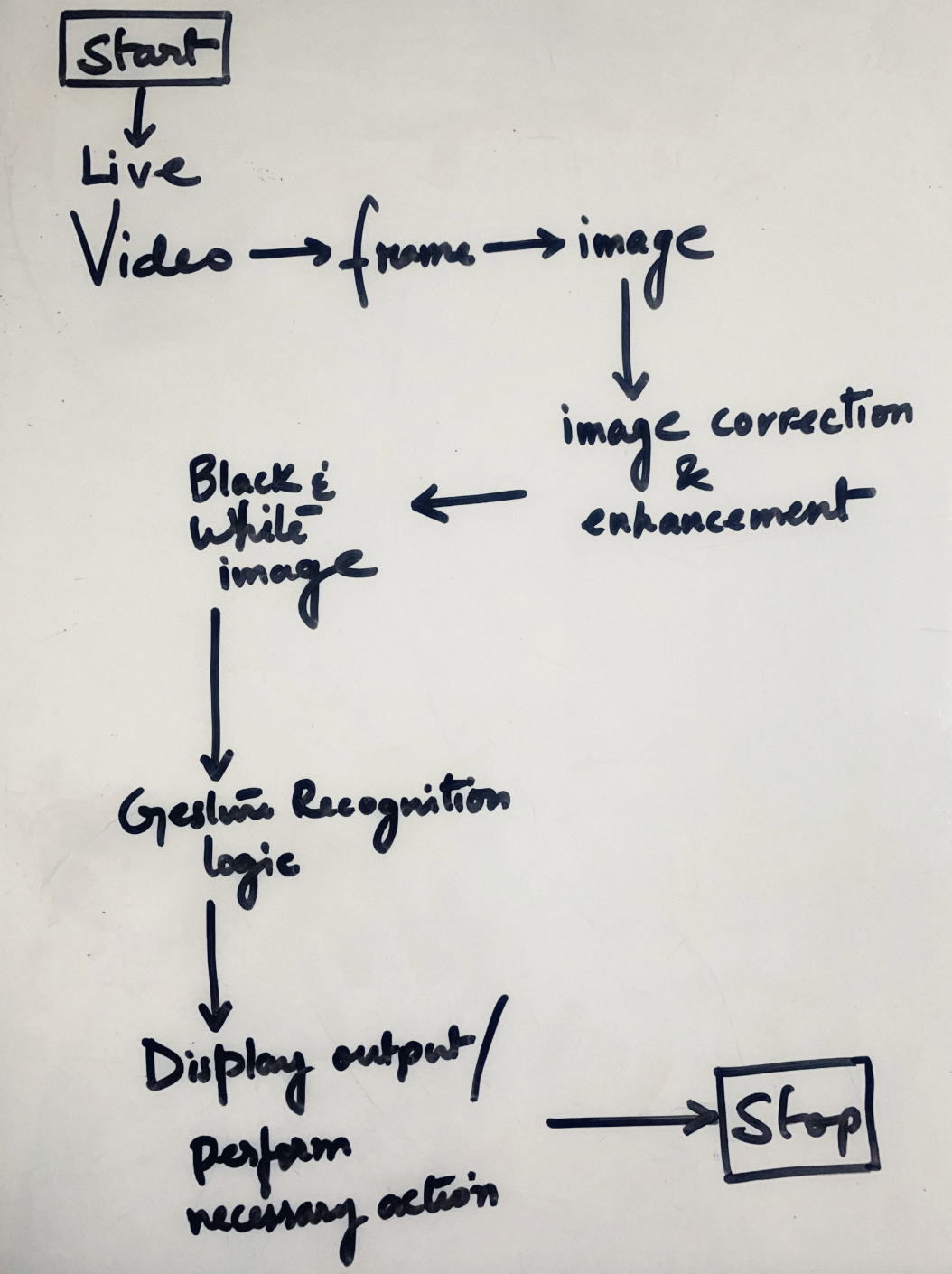

The system captures individual frames in real time and applies an image correction step to each frame.

It then detects the hand in the corrected image and counts the number of fingers shown.

Gesture Types

The algorithm supports two distinct types of gesture input: online gestures, which are direct manipulations such as scaling and rotating, and offline gestures, which are usually processed after the interaction is finished; e.g., a circle is drawn to open a camera application.

Offline gestures: Gestures that are processed after the user's interaction with the object has finished. An example is the gesture to activate a menu.

Online gestures: Direct manipulation gestures, used to scale or rotate a tangible object.
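The difference between the two gesture types can be illustrated with a minimal Python sketch. This is not the project's code: the names GestureKind, GestureSession, on_update, on_finish, and the classify callback are all hypothetical, chosen only to show that online input is applied immediately while offline input is buffered and interpreted once the interaction ends.

```python
from enum import Enum


class GestureKind(Enum):
    ONLINE = "online"    # direct manipulation, applied while the hand moves
    OFFLINE = "offline"  # interpreted only after the interaction finishes


class GestureSession:
    """Hypothetical container that routes gesture input by kind."""

    def __init__(self):
        self.scale = 1.0   # state changed directly by online gestures
        self.path = []     # buffered samples of an offline gesture
        self.actions = []  # actions triggered by completed offline gestures

    def on_update(self, kind, value):
        if kind is GestureKind.ONLINE:
            # Online: apply the scaling factor right away.
            self.scale *= value
        else:
            # Offline: only record the sample, do not act yet.
            self.path.append(value)

    def on_finish(self, classify):
        # Offline gestures are classified once the interaction is over.
        if self.path:
            self.actions.append(classify(self.path))
            self.path = []
```

For example, a drawn circle would be passed to on_update as a sequence of OFFLINE points, and only on_finish would map the whole path to an action such as opening the camera application.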

Algorithm Design

This project combines a few simple ideas from image segmentation with processing of the data extracted from an image. The program receives images as input and processes them to determine the number of fingers shown.

Initially, the RGB image is converted into a binary image. If the background is lighter than the hand, this conversion reverses the colors: the hand comes out black and the background white.

In this case, where the background is lighter, the binary image is converted into its complement. After this, the image is searched from the top for the first white point (A in the figure below). This point is the tip of the longest finger.
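The binarization and complement step might look like the following Python sketch. It rests on assumptions that the write-up does not state: the image is a list of rows of (r, g, b) tuples, the threshold of 128 on the mean channel value is arbitrary, and a light background is detected by checking whether most border pixels are white. The function names are hypothetical.

```python
def to_binary(rgb, threshold=128):
    """Map each (r, g, b) pixel to 1 (white) or 0 (black).

    Assumption: brightness is the plain mean of the three channels.
    """
    return [
        [1 if (r + g + b) / 3 >= threshold else 0 for (r, g, b) in row]
        for row in rgb
    ]


def complement(binary):
    """Swap black and white pixels."""
    return [[1 - px for px in row] for row in binary]


def hand_as_white(rgb, threshold=128):
    """Return a binary image in which the hand is white.

    Assumption: the image border shows background only, so a mostly
    white border means the background is lighter than the hand.
    """
    binary = to_binary(rgb, threshold)
    border = (
        binary[0]
        + binary[-1]
        + [row[0] for row in binary]
        + [row[-1] for row in binary]
    )
    background_is_white = 2 * sum(border) > len(border)
    return complement(binary) if background_is_white else binary
```

With either a light or a dark background, the result uses white for the hand, which is what the search for the first white point relies on.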

A point (B) at a standard threshold distance from this point is marked, and this part of the image (PAQSBR) is segmented out. The number of connected components in the segment, for the specified connectivity, is then found using ‘bwconncomp’. This gives the number of fingers shown by the user. The procedure is repeated with the binary image rotated in each of the four directions, and the result with the maximum value is accepted.
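The counting procedure described above can be sketched in plain Python. Two substitutions are worth naming: a hand-written breadth-first labelling stands in for ‘bwconncomp’, and 8-connectivity is assumed, since the connectivity actually used is not stated. The parameter band_height is a hypothetical stand-in for the threshold distance between A and B.

```python
from collections import deque


def count_components(region):
    """Count groups of white (1) pixels, assuming 8-connectivity."""
    rows = len(region)
    cols = len(region[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if region[r][c] != 1 or seen[r][c]:
                continue
            count += 1  # new component found; flood it breadth-first
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and region[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


def fingers_from_top(binary, band_height):
    """Cut a band starting at the first white row and count its components."""
    top = next((i for i, row in enumerate(binary) if 1 in row), None)
    if top is None:
        return 0  # no white pixel, so no hand in the image
    return count_components(binary[top:top + band_height])


def rotate90(binary):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*binary[::-1])]


def count_fingers(binary, band_height):
    """Try all four orientations and keep the largest count."""
    best = 0
    image = binary
    for _ in range(4):
        best = max(best, fingers_from_top(image, band_height))
        image = rotate90(image)
    return best
```

Taking the maximum over the four rotations means the hand does not have to point upward: in the orientations where the band cuts through the palm or wrist, the band tends to form a single component, so the orientation that separates the fingers wins.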

We performed unmoderated tests with 10 participants to test the accuracy of the algorithm.

User Testing

Methodology

We recruited 5 participants who reported experiencing dexterity issues and difficulty using a mouse. Participants were asked to perform a series of tasks using the hand gesture recognition algorithm on a computer screen. They were given instructions on how to use the system and then asked to perform specific actions, such as showing different numbers of fingers to the camera and zooming in and out, from different directions. After completing the tasks, participants were asked to fill out a questionnaire about their experience using the algorithm.

Participants

Participants for this study were recruited based on the following criteria:

Experience with dexterity issues that make using a mouse difficult

Aged between 18 and 65

Fluent in English

Basic computer skills

Results

Overall, the hand gesture recognition algorithm was found to be accurate (90.4% on average) and easy to use. Participants reported a high level of satisfaction with the system and its performance. We noticed inaccurate results when the background behind the participant's hand was noisy. Future work includes minimizing the execution time.